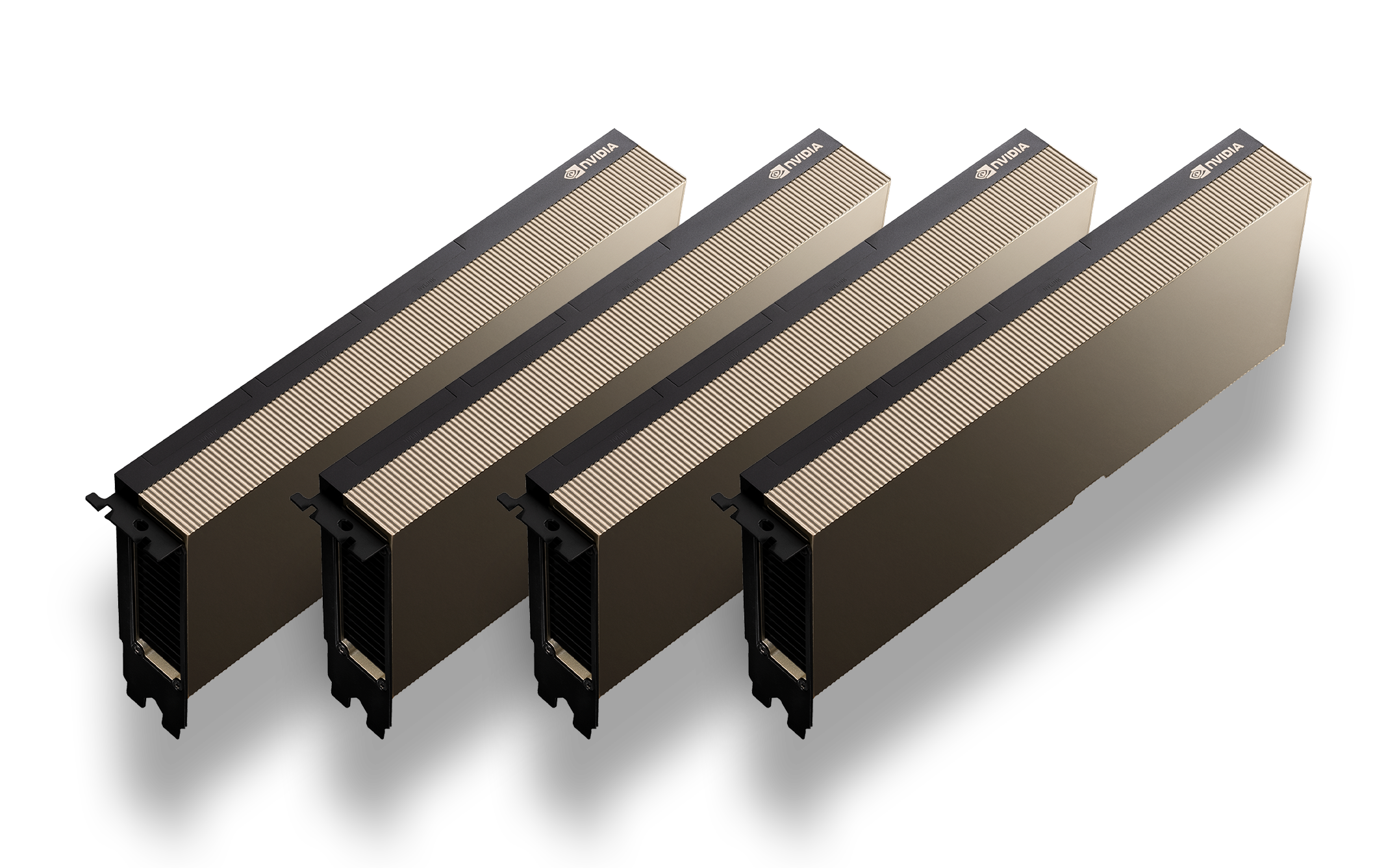

NVIDIA H100 Tensor Core GPU

The flagship data center GPU and the primary engine for the world’s AI infrastructure, built on the NVIDIA Hopper architecture and available in PCIe and SXM form factors.

First AI systems powered by NVIDIA H100

First AI Echelon

Fully integrated GPU clusters built from two or more First AI Scalar or Hyperplane nodes.

First AI offers NVIDIA DGX™ AI supercomputing solutions

Whether creating quality customer experiences, delivering better patient outcomes, or streamlining the supply chain, enterprises need infrastructure that can deliver AI-powered insights. NVIDIA DGX™ systems deliver the world’s leading solutions for enterprise AI supercomputing at scale.

NVIDIA DGX™ H100

The fourth generation of the world's most advanced AI system, providing maximum performance.

NVIDIA DGX™ BasePOD

Industry-standard infrastructure designs for the AI enterprise, offered with a choice of proven storage technology partners.

NVIDIA DGX™ SuperPOD

Turnkey, full-stack, industry-leading infrastructure solution for the fastest path to AI innovation at scale.

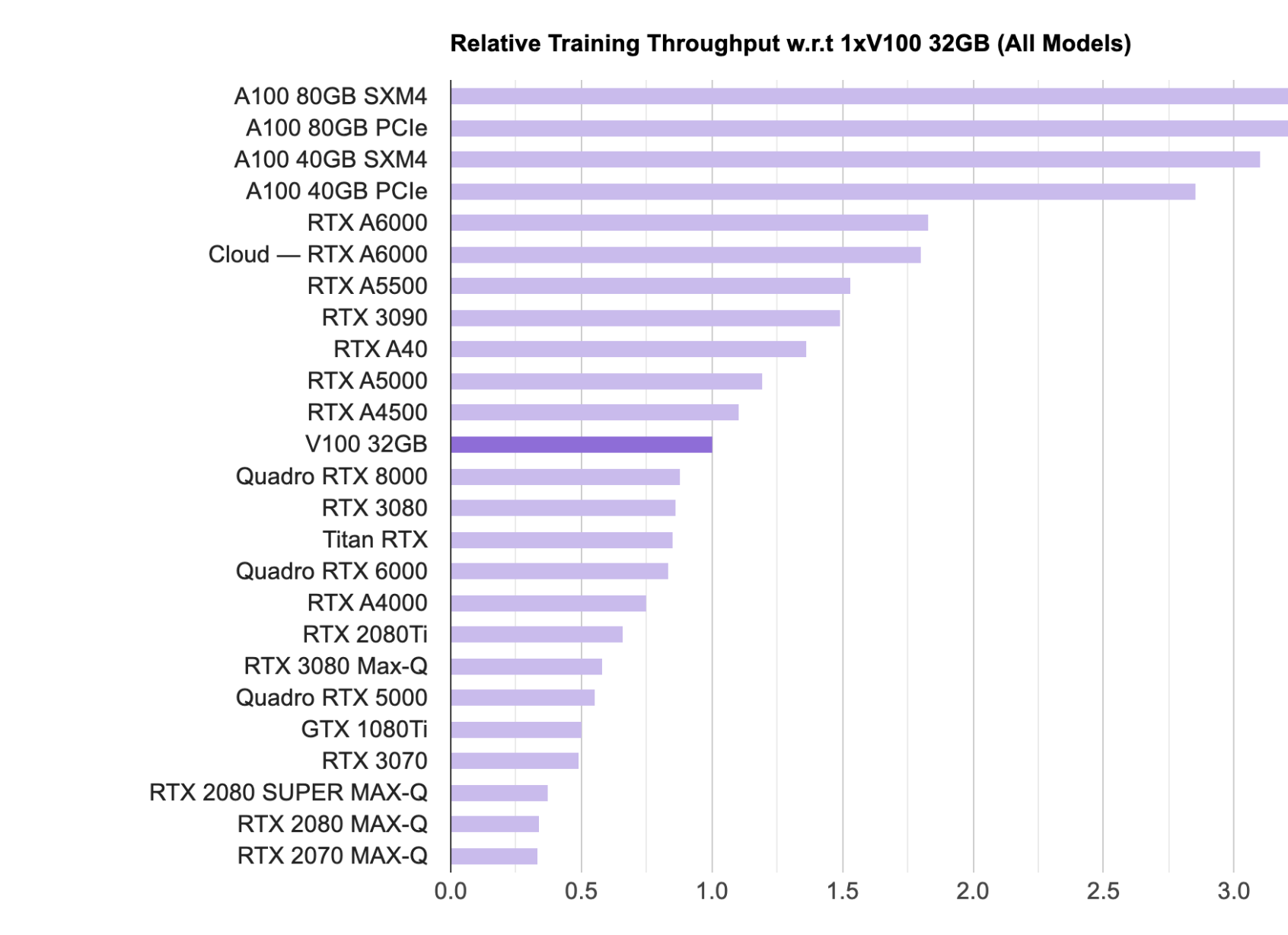

First AI GPU comparisons

First AI's GPU benchmarks for deep learning are run on more than a dozen different GPU types in multiple configurations. GPU performance is measured running models for computer vision (CV), natural language processing (NLP), text-to-speech (TTS), and more. Visit our benchmarks page to get started.

| Specification | H100 SXM | H100 PCIe |
|---|---|---|
| GPU memory | 80 GB | 80 GB |
| GPU memory bandwidth | 3.35 TB/s | 2 TB/s |
| Max thermal design power (TDP) | Up to 700 W (configurable) | 300-350 W (configurable) |
| Multi-Instance GPU (MIG) | Up to 7 MIGs @ 10 GB each | Up to 7 MIGs @ 10 GB each |
| Form factor | SXM | PCIe dual-slot, air-cooled |
| Interconnect | NVLink: 900 GB/s; PCIe Gen5: 128 GB/s | NVLink: 600 GB/s; PCIe Gen5: 128 GB/s |
| Server options | First AI Hyperplane | First AI Scalar |
| NVIDIA AI Enterprise | Add-on | Included |
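As a rough illustration of what the bandwidth rows mean in practice, the Python sketch below turns the H100 table's peak figures into idealized lower-bound times: one full pass over the 80 GB of GPU memory, and a one-way copy of a 10 GB buffer over NVLink or PCIe. The 10 GB buffer size and the helper names (`SPECS`, `ideal_ms`, `summarize`) are hypothetical. It also assumes the NVLink and PCIe figures are aggregate bidirectional bandwidth, so a one-way copy gets about half. Real workloads add latency and protocol overhead, so measured times will be longer.

```python
"""Back-of-envelope transfer times from the H100 spec table (idealized peak figures)."""

# Figures copied from the H100 SXM / H100 PCIe comparison table, in decimal units
# (1 TB/s = 1000 GB/s). Assumption: the NVLink and PCIe numbers are aggregate
# bidirectional bandwidth, so a one-way copy gets roughly half of each.
SPECS = {
    "H100 SXM": {"memory_gb": 80, "mem_bw_gbps": 3350, "nvlink_gbps": 900, "pcie_gbps": 128},
    "H100 PCIe": {"memory_gb": 80, "mem_bw_gbps": 2000, "nvlink_gbps": 600, "pcie_gbps": 128},
}


def ideal_ms(size_gb: float, bandwidth_gbps: float) -> float:
    """Lower bound in milliseconds: size / peak bandwidth, ignoring latency and overhead."""
    if bandwidth_gbps <= 0:
        raise ValueError("bandwidth must be positive")
    return 1000.0 * size_gb / bandwidth_gbps


def summarize(name: str, buffer_gb: float = 10.0) -> dict:
    """Idealized times to sweep all GPU memory once and to copy `buffer_gb` one way."""
    s = SPECS[name]
    return {
        "memory_sweep_ms": ideal_ms(s["memory_gb"], s["mem_bw_gbps"]),
        "nvlink_copy_ms": ideal_ms(buffer_gb, s["nvlink_gbps"] / 2),  # one direction only
        "pcie_copy_ms": ideal_ms(buffer_gb, s["pcie_gbps"] / 2),  # one direction only
    }


if __name__ == "__main__":
    for name in SPECS:
        print(name, {k: round(v, 1) for k, v in summarize(name).items()})
```

Under these assumptions, one pass over all 80 GB takes about 24 ms on H100 SXM versus 40 ms on H100 PCIe. That gap matters most for memory-bandwidth-bound workloads.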

First AI On-Demand Cloud powered by NVIDIA H100 GPUs

NOW AVAILABLE

On-demand HGX H100 systems with 8x NVIDIA H100 SXM GPUs are now available on First AI Cloud for only $2.59/hr/GPU. With H100 SXM you get:

- More flexibility for users looking for more compute power to build and fine-tune generative AI models

- Enhanced scalability

- High-bandwidth GPU-to-GPU communication

- Optimal performance density

First AI Cloud also offers 1x NVIDIA H100 PCIe GPU instances at just $1.99/hr/GPU for smaller experiments.
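To turn the per-GPU prices above into per-instance numbers, here is a minimal Python sketch. It assumes billing is simply GPU count × hours × listed price, with no minimum increments, discounts, storage, or egress charges. It uses `Decimal` to avoid floating-point rounding on currency. The instance labels and the `estimate_cost` helper are hypothetical, not a First AI API.

```python
"""Estimate on-demand cost from the listed First AI Cloud prices (sketch, not a billing tool)."""

from decimal import Decimal, ROUND_HALF_UP

# Listed hourly price per GPU, taken from the text. Assumption: billing is linear in
# GPU-hours with no minimums, discounts, storage, or egress charges.
PRICE_PER_GPU_HOUR = {
    "8x H100 SXM": Decimal("2.59"),
    "1x H100 PCIe": Decimal("1.99"),
}
GPUS_PER_INSTANCE = {"8x H100 SXM": 8, "1x H100 PCIe": 1}


def estimate_cost(instance: str, hours: float) -> Decimal:
    """Cost in USD of running one instance for `hours` hours, rounded to cents."""
    hours_dec = Decimal(str(hours))  # via str() so 0.5 becomes exactly Decimal("0.5")
    if hours_dec < 0:
        raise ValueError("hours must be non-negative")
    gpu_hours = GPUS_PER_INSTANCE[instance] * hours_dec
    cost = gpu_hours * PRICE_PER_GPU_HOUR[instance]
    return cost.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


if __name__ == "__main__":
    print(estimate_cost("8x H100 SXM", 1))    # one node-hour: 20.72
    print(estimate_cost("8x H100 SXM", 24))   # one node-day: 497.28
    print(estimate_cost("1x H100 PCIe", 10))  # ten hours on one PCIe GPU: 19.90
```

For example, one hour on a full 8x H100 SXM node comes to 8 × $2.59 = $20.72 under these assumptions.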


Resources for deep learning

Explore First AI's deep learning materials, including our blog, technical documentation, research, and more. We've curated a diverse set of resources just for ML and AI professionals to help you on your journey.